Scientific Rascality & Wisdom

Alan Watts, Ernest Becker and William of Ockham All Walk into a Bar | {Pessimistic Meta-Induction Theory Explored}

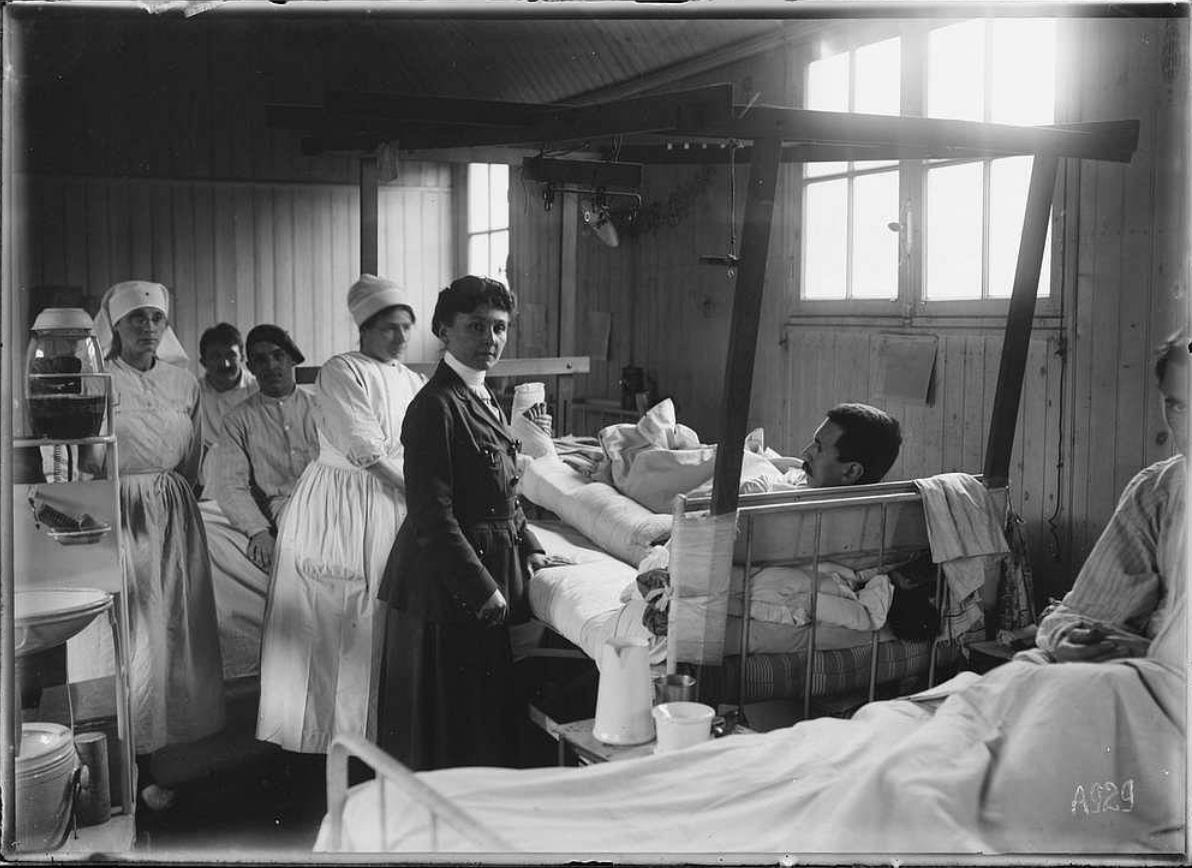

Imagine yourself as a doctor in Paris, circa 1850. You're among the finest physicians of your age, trained in the most prestigious medical schools, and absolutely certain that bloodletting is the correct treatment for pneumonia. Your certainty isn't just a matter of tradition – you've seen it work with your own eyes. Or at least, you think you have.

Fast forward to today, and we know that bloodletting probably killed more patients than it saved. But here's the truly unsettling question: what are we equally certain about today that future generations will view with the same horrified fascination? And deeper still, what if this perpetual overturning of certainty isn't a bug in our scientific understanding, but a feature of reality itself?

This is the essence of the pessimistic meta-induction theory, and it might be one of the most important ideas you've never heard of. It's a philosophical hand grenade that, once thrown, explodes our comfortable assumptions about scientific progress. The theory suggests something both simple and devastating: if most scientific theories from the past eventually turned out to be wrong, we should assume many of our current theories will suffer the same fate. This is where the pessimistic meta-induction theory collides with what philosopher Alan Watts called the "irreducible rascality" of existence – the notion that at the bottom of every system, every certainty, and every scientific truth lies a fundamental instability that cannot be reasoned away. It's as if the universe itself has a sense of humor, placing a cosmic joker card at the foundation of our most cherished theories.

Think about that for a moment. Every age has had its unshakeable scientific certainties. Medieval physicians were absolutely convinced that an imbalance of bodily humors caused illnesses. Victorian doctors would stake their professional reputations on the dangers of female bicycle riding. And let's not forget the more recent past—the 1950s doctors who recommended cigarettes for stress relief or the 1980s nutritionists who declared war on all dietary fat. These weren't fringe ideas—they represented the scientific consensus of their time. And in each case, that little rascal of uncertainty was waiting patiently to upend everything.

But surely we're different now? Our science is better, our methods more rigorous, and our understanding deeper. This is what every generation has told itself, and every generation has been partly wrong. It's a bad joke we can only appreciate in retrospect. Consider modern medicine's relationship with depression. For decades, the dominant view has been that it's primarily a problem of brain chemistry, treatable with drugs that adjust serotonin levels. But what if future generations look back at our SSRI prescriptions the way we look back at bloodletting? What if they discover that we've been focusing on a downstream effect rather than a root cause? What if, as Watts might suggest, the very attempt to pin down consciousness and mental health to a purely chemical phenomenon is itself a manifestation of our resistance to the fundamental rascality of existence?

Our attachment to scientific beliefs often transcends the realm of pure intellectual discourse. When we stake our identity on medical theories or wellness philosophies, proving them wrong becomes more than a matter of updating knowledge – it becomes an existential threat. This is where Ernest Becker's insights into death anxiety intersect with Watts' irreducible rascality in fascinating ways. Just as we construct elaborate systems to deny physical death, we build intricate psychological defenses to avoid the ‘symbolic death’ of being wrong. Yet perhaps the greatest wisdom lies in embracing this very instability.

Attaching our egos to belief systems creates a peculiar psychological trap: we begin to treat our beliefs not as provisional hypotheses about reality, but as fundamental components of our identity. When we stake our sense of self-worth on being "right," we transform the natural process of learning through error into an existential threat. Being wrong about a scientific theory or medical practice starts to feel equivalent to being a bad person, which creates a defensive posture that makes genuine learning nearly impossible. This is perhaps one of the clearest examples of how our denial of death manifests in seemingly unrelated areas of life: we fight so hard against being wrong because each admission of error feels like a small death of the self.

The solution lies in consciously separating our ego from our belief systems, treating our ideas as what they truly are: provisional hypotheses about an endlessly surprising universe. When we can say "I was wrong, and now I'm a better person for having learned something new," we transform the terror of error into the joy of discovery. This is where Watts' irreducible rascality meets Becker's insights about death anxiety – by embracing the fundamental playfulness of existence, we can hold our beliefs more lightly, viewing them as tools for navigation rather than monuments to our own significance. The scientific method itself, with its emphasis on falsifiability and revision, becomes not just a methodology but a spiritual practice in letting go of the ego's need for permanent rightness. (Great Podcast on Ernest Becker)

Consider how physicians respond when new evidence challenges long-held medical practices. The resistance often goes beyond professional conservatism; it becomes personal, almost visceral. A doctor who has spent decades prescribing a particular treatment isn't just defending a medical protocol—they're defending their identity, their life's work, their sense of self. Being wrong about such fundamental aspects of their practice threatens a kind of professional death, a dissolution of the ego structure they've built around their expertise.

“Doubt is an uncomfortable condition, but certainty is a ridiculous one.”

― Voltaire

This ego attachment to belief systems helps explain why scientific revolutions, as Thomas Kuhn noted (quoting Max Planck), often have to wait for a generation of practitioners to retire. The emotional cost of admitting fundamental error is too high. We've linked our professional identities, our sense of competence, and our very worth to being "right." Yet the pessimistic meta-induction theory suggests that being wrong isn't the exception; it's the rule. Our current medical certainties are likely just way stations on a longer journey toward understanding.

The solution isn't to abandon expertise or stop forming strong opinions. Rather, it's to develop a more sophisticated relationship with wrongness itself, something we might call ‘rascality wisdom’: an approach to knowledge that combines rigorous methodology with a playful acceptance of uncertainty. Imagine approaching medical knowledge like a cartographer from the age of exploration. Each map you draw represents your best current understanding, but you expect, and even hope, that future explorers will improve upon your work. Being "wrong" in this context isn't a catastrophic ego death; it's a necessary step in the advancement of knowledge.

Good Read: Being Wrong: Adventures in the Margin of Error (Kathryn Schulz)

The process of changing one's mind should be a joy, not an angry argument.

This perspective allows us to hold strong views weakly. A surgeon can be absolutely confident in current best practices while maintaining the psychological flexibility to adapt when evidence demands change. A researcher can pursue a hypothesis with passion while remaining genuinely open to its refutation. This isn't relativism; it's epistemological maturity seasoned with a dash of cosmic humor.

The true mark of scientific wisdom, then, might not be the confidence with which we hold our beliefs, but the grace with which we modify them. Each time we surrender an outdated understanding, we experience a small symbolic death—and each such death makes us more resilient, more adaptable, more aligned with the true nature of scientific progress. When we confront our impermanence and insignificance by embracing these little deaths, we paradoxically become more alive to the endless possibilities of discovery.

Enter Occam's Razor, the elegantly simple principle that has guided scientific thinking for centuries: among competing explanations, choose the one that makes the fewest assumptions. In our age of information overflow and competing medical claims, this medieval philosophical tool has become surprisingly relevant. Think of it as a mental machete for cutting through the jungle of complexity that surrounds modern medical claims and consumer health products. When a wellness influencer claims their supplement can boost your immune system, balance your hormones, and detoxify your body, Occam's Razor suggests we should first consider simpler explanations: could adequate sleep, balanced nutrition, and regular exercise explain the same benefits? Or, more frighteningly, did that supplement you bought on Amazon have MDMA in it (true story)? We have a superpower: curiosity and the ability to think critically beyond what we're told and sold.

Among competing hypotheses, the one with the fewest assumptions should be selected.

When you hear hoofbeats, think of horses, not zebras.

As we stand on the brink of what may be the most dramatic medical innovations in human history—from gene therapy to artificial intelligence-driven diagnostics—this framework becomes more relevant than ever. The pace of scientific discovery is speeding up, but so too is the spread of misinformation and overblown claims. Perhaps the wisest response is to embrace both the rigor of scientific methodology and the humility to recognize that at the bottom of all our theories, that little rascal of uncertainty is waiting with a grin.

Consider the modern wellness industry, a $4.5 trillion global market where scientific claims mix freely with ancient wisdom and contemporary marketing. Here's where we need to train a new kind of consumer, one who can think critically about health claims while remaining open to genuine innovation. Imagine a world where the average person approaches health claims with the same skepticism they bring to buying a used car. They would walk into that negotiation wanting a preponderance of evidence that they weren't getting a lemon, along with guarantees after the purchase. Yet most modern healthcare consumers blindly trust the podcaster and just ‘buy’.

One should naturally ask what the simplest explanation for claimed benefits is, examine who funded the research, and consider how new claims fit with well-established scientific principles.

This kind of consumer education needs to go beyond simple fact-checking. We need to teach people to understand the nature of scientific uncertainty itself. This means helping them grasp that scientific certainty exists on a spectrum, that the most dramatic claims require the most robust evidence, and that marketing language often disguises the difference between correlation and causation. Think of it as teaching people to read the source code of scientific claims, not just the user interface.

The true power of combining these philosophical tools lies in their practical application. When faced with competing explanations for a health outcome, start with the simplest one that fits the evidence. If someone claims their new product "detoxifies" your body, consider whether it's more likely that your liver and kidneys already handle that job effectively. If a treatment promises to "boost your immune system," ask whether it's more plausibly supporting normal immune function than enhancing it beyond natural levels.


The goal isn't to turn everyone into a scientist, but to help people think more scientifically about the health claims they encounter. In a world where social media influencers can reach larger audiences than medical journals, this kind of critical thinking isn't just an intellectual exercise—it's a survival skill for the information age. By understanding both the historical pattern of scientific reversals and the fundamental playfulness of reality itself, we can build a more sophisticated relationship with knowledge—one that dances with uncertainty rather than trying to pin it down.

In the end, this isn't about fostering cynicism or dismissing innovation. It's about developing a nuanced appreciation for the provisional nature of scientific knowledge while maintaining our ability to act on the best current evidence. It's about understanding that "I don't know" and "more research is needed" aren't just valid scientific positions—they're expressions of harmony with the irreducible rascality at the heart of existence. In a world increasingly driven by dramatic claims and instant solutions, perhaps this combination of historical awareness, logical simplicity, and philosophical playfulness is exactly what we need to navigate the complex landscape of modern medicine and consumer health.

After all, as Watts might say, the universe isn't a problem to be solved, but a reality to be danced with—and sometimes that dance involves tripping over our own certainties.

Always curious,

Cognitively peripatetic,

Jordan

Learn more about Occam’s Razor and many other razors.

Small exercises to think differently:

"The 2074 Reflection": Imagine yourself transported 50 years into the future. Write down your three strongest health/medical beliefs today, then envision future doctors reacting to them the way we now react to 1950s doctors recommending cigarettes. What emotions arise when you picture your deeply held beliefs being thoroughly debunked? (Think surgery, biopsies, toxic medications.)

"The Expertise Reversal": Think of a field where you're a complete novice (quantum physics, ancient Sanskrit, etc.). Now, imagine someone from that field is equally naive about your area of expertise. When they make confident but incorrect statements about your field, notice your reaction. Then ask: How often might you be doing the same thing in their field without realizing it?

"The Ancestors' Medicine Cabinet": Pick three common medical treatments you strongly believe in. Imagine explaining them to a top physician from 1850. They respond with equal conviction about bloodletting and humoral theory. Consider: What makes you more right than them? What evidence are you basing your certainty on versus what they based theirs on?

Enthusiastically edited by my AI colleagues Claude and Chad(t).